To use all functions of this page, please activate cookies in your browser.

my.chemeurope.com

With an accout for my.chemeurope.com you can always see everything at a glance – and you can configure your own website and individual newsletter.

- My watch list

- My saved searches

- My saved topics

- My newsletter

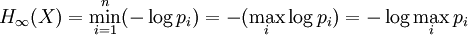

Min-entropy

In probability theory and information theory, the min-entropy of a discrete random event $X$ with possible states (or outcomes) $1, \ldots, n$ and corresponding probabilities $p_1, \ldots, p_n$ is

$$H_\infty(X) = \min_i (-\log p_i) = -\log \max_i p_i$$

The base of the logarithm is just a scaling constant; for a result in bits, use a base-2 logarithm. Thus, a distribution has a min-entropy of at least $b$ bits if no possible state has a probability greater than $2^{-b}$.

The min-entropy is always less than or equal to the Shannon entropy; the two are equal when all the probabilities $p_i$ are equal. Min-entropy is important in the theory of randomness extractors.

The notation $H_\infty(X)$ derives from a parameterized family of Shannon-like entropy measures (the Rényi entropies),

$$H_k(X) = -\log \sqrt[k-1]{\sum_i (p_i)^k}$$

The case $k = 1$, taken as the limit $k \to 1$ because the formula itself is undefined there, is Shannon entropy. As $k$ is increased, more weight is given to the larger probabilities, and in the limit as $k \to \infty$, only the largest $p_i$ has any effect on the result.

This article is licensed under the GNU Free Documentation License. It uses material from the Wikipedia article "Min-entropy". A list of authors is available in Wikipedia.
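The relationships described in the article can be checked numerically. The following is a minimal sketch, not part of the original article: the function names `min_entropy`, `shannon_entropy` and `renyi_entropy` and the two example distributions are illustrative assumptions, and all results are in bits (base-2 logarithm).

```python
import math


def min_entropy(probs):
    """Min-entropy in bits: -log2 of the largest probability."""
    return -math.log2(max(probs))


def shannon_entropy(probs):
    """Shannon entropy in bits; zero-probability states contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def renyi_entropy(probs, k):
    """H_k in bits for k > 0.

    Uses -log2((sum_i p_i^k)^(1/(k-1))) = -log2(sum_i p_i^k) / (k - 1).
    The formula is undefined at k = 1, so that case falls back to the
    Shannon entropy, which is its limit as k -> 1.
    """
    if k == 1:
        return shannon_entropy(probs)
    return -math.log2(sum(p ** k for p in probs)) / (k - 1)


# Hypothetical example distributions (not taken from the article).
biased = [0.5, 0.25, 0.125, 0.125]
uniform = [0.25, 0.25, 0.25, 0.25]

for name, dist in (("biased", biased), ("uniform", uniform)):
    print(name,
          "min:", min_entropy(dist),
          "shannon:", shannon_entropy(dist),
          "H_2:", renyi_entropy(dist, 2),
          "H_200:", renyi_entropy(dist, 200))
```

For the biased distribution the largest probability is 1/2, so the min-entropy is 1 bit while the Shannon entropy is 1.75 bits; for the uniform distribution both are 2 bits. The value of `renyi_entropy` for a large `k` approaches the min-entropy, which matches the statement that only the largest probability matters in the limit.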