
Negentropy

Erwin Schrödinger used the concept of "negative entropy" in his popular-science book What is Life? (published in 1944, based on lectures delivered in 1943). [1] Later, Léon Brillouin shortened the expression to a single word, negentropy. [2][3] Schrödinger introduced the concept when explaining that a living system exports entropy in order to keep its own entropy low (see entropy and life). By using the term negentropy, he could express this fact in a more "positive" way: a living system imports negentropy and stores it. Schrödinger explains his usage of the term in a note to What is Life?

In 1974, Albert Szent-Györgyi proposed replacing the term negentropy with syntropy, a term which may have originated in the 1940s with the Italian mathematician Luigi Fantappiè, who attempted to construct a unified theory of the biological and physical worlds. (This attempt has not gained renown or borne great fruit.) Buckminster Fuller attempted to popularize this usage, though negentropy remains the more common term.

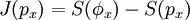

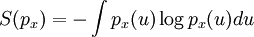

Information theory

In information theory and statistics, negentropy is used as a measure of distance to normality. [4][5][6] Consider a signal with a certain distribution. Of all distributions with a given mean and variance, the Gaussian (normal) distribution has the highest entropy, and negentropy measures how far the entropy of the signal falls below that maximum. Negentropy is always non-negative, is invariant under any invertible linear change of coordinates, and vanishes if and only if the signal is Gaussian.

Negentropy is defined as

$$J(p_x) = S(\varphi_x) - S(p_x)$$

where $\varphi_x$ is the Gaussian density with the same mean and variance as $p_x$, and $S(p_x)$ is the differential entropy:

$$S(p_x) = -\int p_x(u) \log p_x(u) \, du$$

Negentropy is used in statistics and signal processing. It is related to network entropy, which is used in independent component analysis. [7][8]

Negentropy can be understood intuitively as the information that can be saved when representing $p_x$ in an efficient way. If $p_x$ were a Gaussian random variable with the same mean and variance, it would need the maximum length of data to be represented, even in the most efficient way. Since $p_x$ is less random, something about it is known beforehand: it contains less unknown information and needs less data to be represented efficiently.

Organization theory

In risk management, negentropy is the force that seeks to achieve effective organizational behavior and lead to a steady, predictable state. [9]
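To make the information-theoretic definition concrete, the following minimal Python sketch estimates negentropy as S(φx) − S(px) for a few one-dimensional densities, using natural logarithms (so the result is in nats). The function names (`gaussian_entropy`, `integrate`, `negentropy`), the midpoint-rule integration, and the integration limits are illustrative assumptions and not part of the original article. It relies on the standard closed form for the entropy of a Gaussian with variance σ², namely ½ ln(2πe σ²).

```python
import math


def gaussian_entropy(variance):
    """Differential entropy (nats) of a Gaussian with the given variance."""
    return 0.5 * math.log(2.0 * math.pi * math.e * variance)


def integrate(f, lo, hi, n):
    """Midpoint-rule approximation of the integral of f over [lo, hi]."""
    h = (hi - lo) / n
    return h * sum(f(lo + (i + 0.5) * h) for i in range(n))


def negentropy(pdf, lo, hi, n=20_000):
    """Estimate S(phi_x) - S(p_x) for a density concentrated on [lo, hi]."""
    mass = integrate(pdf, lo, hi, n)  # close to 1 for a proper density
    mean = integrate(lambda u: u * pdf(u), lo, hi, n) / mass
    var = integrate(lambda u: (u - mean) ** 2 * pdf(u), lo, hi, n) / mass

    def neg_p_log_p(u):
        p = pdf(u)
        # Treat 0 * log(0) as 0, the usual convention for entropy.
        return -p * math.log(p) if p > 0.0 else 0.0

    entropy = integrate(neg_p_log_p, lo, hi, n)
    return gaussian_entropy(var) - entropy


def uniform_pdf(u):
    """Uniform density on [0, 1]."""
    return 1.0 if 0.0 <= u <= 1.0 else 0.0


def normal_pdf(u):
    """Standard normal density."""
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)


def laplace_pdf(u):
    """Laplace density with location 0 and scale 1."""
    return 0.5 * math.exp(-abs(u))


if __name__ == "__main__":
    print("uniform :", negentropy(uniform_pdf, 0.0, 1.0))
    print("normal  :", negentropy(normal_pdf, -10.0, 10.0))
    print("laplace :", negentropy(laplace_pdf, -40.0, 40.0))
```

Under these assumptions the Gaussian case comes out at essentially zero, while the uniform and Laplace cases are strictly positive. Their closed-form values are ½ ln(2πe/12) ≈ 0.176 nats and ½ ln(4πe) − 1 − ln 2 ≈ 0.072 nats respectively, consistent with negentropy vanishing only for a Gaussian signal.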


This article is licensed under the GNU Free Documentation License. It uses material from the Wikipedia article "Negentropy". A list of authors is available in Wikipedia.